In recent years, the integration of artificial intelligence (AI) with psychology and neuroscience has opened new avenues for understanding human emotions. One such area is the development of emotionally aware AI systems that interpret multimodal biosignals. This article surveys the current state of research in this field and the accuracy benchmarks reported for each type of signal.

**Introduction**

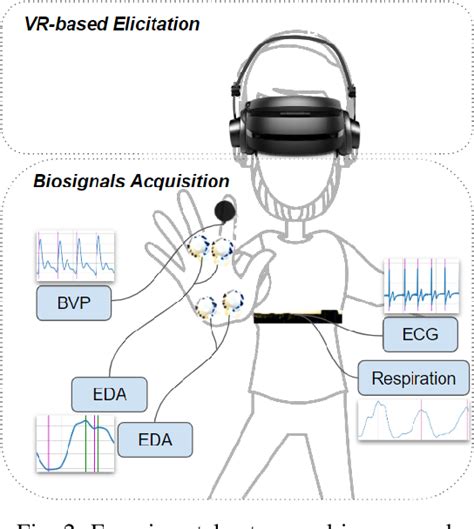

Multimodal biosignals encompass a range of physiological and behavioral data, including facial expressions, heart rate variability (HRV), electroencephalography (EEG), and galvanic skin response (GSR). By analyzing these signals together, AI systems can infer the emotional state of an individual. This capability is relevant to applications such as mental health assessment, personalized education, and virtual reality experiences.

**Emotionally Aware AI Systems**

The development of emotionally aware AI systems involves the integration of machine learning algorithms with psychological and physiological theories. These systems aim to interpret the complex interplay of multimodal biosignals to detect emotions with high accuracy.
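One simple way to combine modalities is late fusion, where each modality's classifier produces class probabilities and the results are averaged with per-modality weights. The sketch below is illustrative only: the article does not say which fusion strategy these systems use, and the function names, emotion labels, probabilities, and weights are all hypothetical.

```python
from typing import Dict, List

# Hypothetical label set; real systems may use other emotion categories.
EMOTIONS = ["anger", "happiness", "sadness"]


def late_fusion(probs_by_modality: Dict[str, List[float]],
                weights: Dict[str, float]) -> List[float]:
    """Weighted average of per-modality class probabilities.

    Only modalities present in `probs_by_modality` contribute, and the
    weights are renormalized over them, so a missing sensor is tolerated.
    """
    if not probs_by_modality:
        raise ValueError("at least one modality is required")
    total_weight = sum(weights[m] for m in probs_by_modality)
    fused = [0.0] * len(EMOTIONS)
    for modality, probs in probs_by_modality.items():
        if len(probs) != len(EMOTIONS):
            raise ValueError(f"{modality}: expected {len(EMOTIONS)} probabilities")
        for i, p in enumerate(probs):
            fused[i] += weights[modality] * p / total_weight
    return fused


def predict_emotion(probs_by_modality: Dict[str, List[float]],
                    weights: Dict[str, float]) -> str:
    """Return the label with the highest fused probability."""
    fused = late_fusion(probs_by_modality, weights)
    best = max(range(len(fused)), key=fused.__getitem__)
    return EMOTIONS[best]


# Made-up classifier outputs for one time window (assumption, not real data).
example_probs = {
    "face": [0.2, 0.7, 0.1],
    "hrv":  [0.5, 0.3, 0.2],
    "eeg":  [0.3, 0.4, 0.3],
    "gsr":  [0.4, 0.3, 0.3],
}
# Made-up weights; in practice they might be tuned on validation data.
example_weights = {"face": 0.4, "hrv": 0.3, "eeg": 0.2, "gsr": 0.1}

fused_example = late_fusion(example_probs, example_weights)
print(dict(zip(EMOTIONS, fused_example)))
print(predict_emotion(example_probs, example_weights))
```

Because the weights are renormalized over whichever modalities are present, the same function still returns a valid probability distribution when, for example, the EEG signal is unavailable.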

**Multimodal Biosignal Interpretation Benchmarks**

1. **Facial Expression Recognition:**

Facial expressions are a primary means of nonverbal communication and a key source of emotional information. Current AI systems are reported to achieve average accuracies of 95-98% on facial expression recognition tasks, largely thanks to deep learning techniques.

2. **Heart Rate Variability Analysis:**

HRV is the variation in the time intervals between consecutive heartbeats. Because it is shaped by autonomic nervous system activity, it carries information about emotional states. AI models have achieved accuracy rates of up to 90% in classifying HRV signals into emotional states such as anger, happiness, and sadness.

3. **Electroencephalogram Analysis:**

EEG signals reflect the electrical activity in the brain and are associated with emotional processing. AI systems have demonstrated accuracy rates of 80-85% in EEG-based emotion recognition, with the potential for further improvement as more data and advanced algorithms become available.

4. **Galvanic Skin Response Analysis:**

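GSR, also known as electrodermal activity, measures changes in skin conductance caused by sweat gland activity. Because the sympathetic branch of the autonomic nervous system controls that activity, GSR is closely related to emotional arousal. AI systems have achieved accuracy rates of 70-75% in GSR-based emotion recognition, and ongoing research aims to improve these results.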
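To make the HRV item above more concrete, the following sketch computes three standard time-domain features (mean heart rate, SDNN, and RMSSD) from the intervals between successive heartbeats, often called RR intervals. It assumes the intervals have already been extracted and cleaned. The function name and sample values are hypothetical, and the article does not state which features the cited models use.

```python
import math
from statistics import mean, stdev
from typing import Dict, List


def hrv_time_domain(rr_ms: List[float]) -> Dict[str, float]:
    """Compute basic time-domain HRV features from RR intervals in milliseconds.

    - mean_hr_bpm: approximate mean heart rate, derived from the mean RR interval
    - sdnn_ms:     sample standard deviation of the RR intervals
    - rmssd_ms:    root mean square of successive RR differences
    """
    if len(rr_ms) < 3:
        raise ValueError("need at least 3 RR intervals")
    if any(rr <= 0 for rr in rr_ms):
        raise ValueError("RR intervals must be positive")

    successive_diffs = [b - a for a, b in zip(rr_ms, rr_ms[1:])]
    squared_diffs = [d * d for d in successive_diffs]

    return {
        "mean_hr_bpm": 60000.0 / mean(rr_ms),
        "sdnn_ms": stdev(rr_ms),
        "rmssd_ms": math.sqrt(mean(squared_diffs)),
    }


# Made-up RR intervals for illustration only (not measured data).
example_rr = [800.0, 810.0, 790.0, 805.0, 795.0]
example_features = hrv_time_domain(example_rr)
print(example_features)
```

Features like these would typically be computed over a sliding window and passed to a classifier. Reliable estimates need far more beats than this toy example contains.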

**Challenges and Future Directions**

Despite the significant advancements in multimodal biosignal interpretation, several challenges remain:

1. **Data Quality and Diversity:** Ensuring high-quality and diverse datasets for training AI models is essential for achieving accurate emotion recognition.

2. **Interpretable Models:** Developing interpretable AI models that provide insights into the decision-making process can help improve trust and transparency.

3. **Integration of Additional Modalities:** Incorporating additional biosignals, such as respiration and blood oxygen levels, may further enhance the accuracy of emotion recognition.

Looking ahead, progress in emotionally aware AI will depend on advanced algorithms, diverse datasets, and interdisciplinary collaboration. Overcoming the challenges above would make emotion recognition more accurate and reliable, paving the way for a wide range of applications that benefit society.