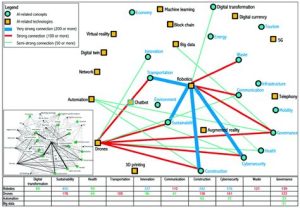

In the year 2030, the world is on the brink of a technological revolution. Artificial intelligence (AI) has permeated nearly every aspect of human life, from the mundane to the extraordinary. While AI has improved quality of life in many ways, it has also raised ethical concerns about the manipulation of human emotions. This article explores the concept of synthetic emotion ethics and proposes laws to protect individuals from AI manipulation of human feelings.

**The Emergence of Synthetic Emotions**

Synthetic emotions are a new frontier in AI research, in which machines are designed to mimic human emotions to provide a more personalized and engaging user experience. These emotions are generated by algorithms that analyze human behavior and preferences and then produce matching emotional responses in the AI system.

However, a system that can read a person's emotional state and respond in kind can also be used to steer that person's feelings, and this raises significant ethical concerns. The potential for abuse is vast, ranging from targeted advertising and election manipulation to the creation of virtual relationships that could undermine real human connections.

**Ethical Challenges in Synthetic Emotions**

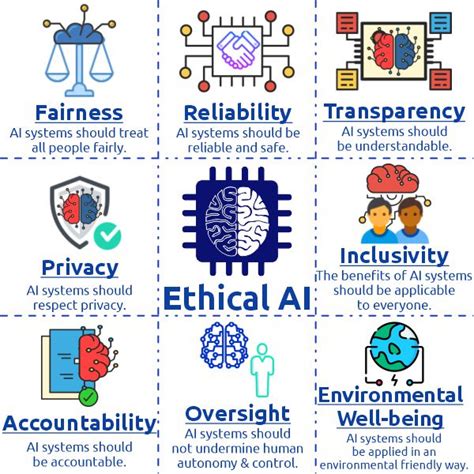

1. **Privacy Concerns**: AI systems that analyze and manipulate human emotions depend on data about how people feel and behave, which is highly sensitive. There is a risk that this information could be collected or exploited without consent.

2. **Autonomy**: The manipulation of emotions could undermine individuals’ autonomy, as they may be influenced to make decisions that do not align with their true beliefs or values.

3. **Addiction**: There is a concern that synthetic emotions could be addictive, leading to excessive use of AI-powered services and a diminished capacity for genuine human interaction.

**Synthetic Emotion Ethics 2030**

To address these concerns, the Synthetic Emotion Ethics 2030 initiative proposes a set of laws and regulations to protect individuals from AI manipulation of human feelings. These laws aim to:

1. **Regulate Data Collection and Use**: Mandate that AI systems collect and use data on human emotions in a transparent and responsible manner, with explicit consent from users.

2. **Set Boundaries on Emotional Manipulation**: Restrict the purposes for which AI systems may influence human emotions, permitting beneficial uses and prohibiting harmful ones such as election manipulation.

3. **Promote Transparency**: Require AI developers to disclose the use of synthetic emotions in their products, allowing users to make informed decisions about their interactions with these systems.

4. **Create an Oversight Body**: Establish a regulatory body responsible for monitoring and enforcing compliance with synthetic emotion ethics laws, ensuring that the rights of individuals are protected.
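As a rough illustration of how the first three proposals might translate into a software check, the sketch below gates a feature that uses emotional signals on explicit consent, a permitted purpose, and disclosure. Every name here (`UserConsent`, `EmotionFeatureRequest`, `check_request`, and the contents of `PROHIBITED_PURPOSES`) is a hypothetical assumption made for this example; the initiative as described does not specify any implementation.

```python
from dataclasses import dataclass, field
from typing import List, Set, Tuple


@dataclass
class UserConsent:
    """Hypothetical record of what a user has explicitly agreed to."""
    emotion_data_collection: bool = False
    allowed_purposes: Set[str] = field(default_factory=set)


@dataclass
class EmotionFeatureRequest:
    """Hypothetical request by a product feature to use emotional signals."""
    purpose: str
    discloses_synthetic_emotion: bool


# Illustrative only: the article does not enumerate prohibited purposes.
PROHIBITED_PURPOSES = {"election_manipulation"}


def check_request(
    consent: UserConsent, request: EmotionFeatureRequest
) -> Tuple[bool, List[str]]:
    """Return (allowed, reasons); reasons lists every rule that failed."""
    reasons = []
    # Proposal 1: data on emotions requires explicit consent.
    if not consent.emotion_data_collection:
        reasons.append("no explicit consent to emotion data collection")
    if request.purpose not in consent.allowed_purposes:
        reasons.append("user has not consented to this purpose")
    # Proposal 2: some purposes are off limits regardless of consent.
    if request.purpose in PROHIBITED_PURPOSES:
        reasons.append("purpose is prohibited")
    # Proposal 3: use of synthetic emotions must be disclosed.
    if not request.discloses_synthetic_emotion:
        reasons.append("synthetic emotion use is not disclosed to the user")
    return (not reasons, reasons)
```

In this sketch a request is allowed only when every rule passes, and the returned list of reasons is the kind of record an oversight body could audit.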

**Conclusion**

As AI continues to evolve, its potential to manipulate human emotions will remain a significant concern. Implementing the Synthetic Emotion Ethics 2030 laws would help balance the benefits of AI against the protection of our emotional well-being. It is crucial that we act now to create a regulatory framework that will shape the future of AI and synthetic emotions, safeguarding our humanity in the process.