Title: Autonomous Police Ethics: Predictive Policing Algorithms, Racial Bias Audits, and the Future of Law Enforcement

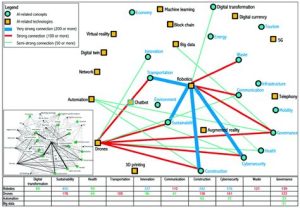

In recent years, the advent of autonomous technology has brought about a new era in law enforcement. Predictive policing algorithms, autonomous police vehicles, and even drones have substantially changed how police work is planned and carried out. As this technology continues to evolve, addressing the ethical concerns surrounding its use becomes more important. This article examines the autonomous police ethics debate, focusing on predictive policing algorithms and racial bias audits.

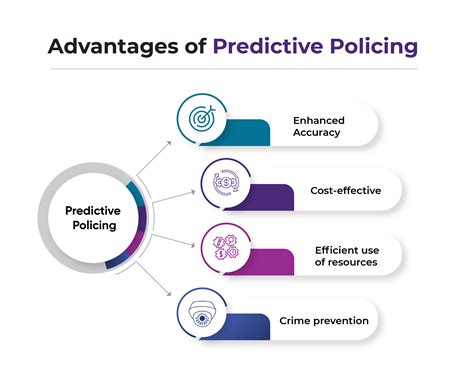

Predictive policing algorithms are designed to analyze historical data, identify patterns, and predict where, when, or by whom future crimes are likely to be committed. These tools aim to make law enforcement agencies more efficient and effective by helping them allocate resources more strategically and, ideally, reduce crime. While the potential benefits of predictive policing are substantial, the approach raises several ethical concerns that need to be addressed.
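To make "predicting from historical data" concrete, here is a minimal Python sketch of the simplest possible approach: ranking map grid cells by how many incidents were recorded in each one in the past. The grid-cell IDs, the incident list, and the function name `rank_hotspots` are hypothetical; deployed systems use more elaborate models, but they share the same dependence on historical records.

```python
from collections import Counter
from typing import Iterable, List, Tuple


def rank_hotspots(incident_cells: Iterable[str], top_k: int = 3) -> List[Tuple[str, int]]:
    """Rank grid cells by their number of past recorded incidents.

    This naive frequency model assumes that where incidents were recorded
    in the past is where they will be recorded in the future.
    """
    counts = Counter(incident_cells)
    # most_common() orders by count; ties keep first-seen order (Python 3.7+).
    return counts.most_common(top_k)


# Hypothetical records: each entry is the grid cell of one recorded incident.
history = ["A1", "B2", "A1", "C3", "A1", "B2", "D4"]
print(rank_hotspots(history, top_k=2))  # [('A1', 3), ('B2', 2)]
```

Because the model only ever sees recorded incidents, any skew in how those records were collected passes straight into its rankings.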

One of the most pressing issues is the risk of racial bias within these algorithms. Historically, law enforcement has been marred by racial profiling, leading to disproportionate targeting of minority communities. Because predictive policing algorithms learn from historical records, they can exacerbate these disparities when the training data are not representative of the population: records shaped by past profiling reflect where police chose to look, not only where crime actually occurred. Racial bias audits are essential to ensure that these algorithms do not perpetuate existing inequalities.
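One basic representativeness check an auditor might run is to compare each group's share of the training records with its share of the population served. The sketch below assumes that each training record carries a demographic group label and that population shares are available (for example, from census data); the labels, shares, and the function `representation_ratios` are hypothetical. A ratio well above 1.0 means the group is over-represented in the data relative to its population share. This measures representation only: it cannot by itself tell whether over-representation reflects actual crime or past enforcement patterns.

```python
from collections import Counter
from typing import Dict, List


def representation_ratios(record_groups: List[str],
                          population_shares: Dict[str, float]) -> Dict[str, float]:
    """For each group, divide its share of the records by its share of the population."""
    counts = Counter(record_groups)
    total = len(record_groups)
    ratios: Dict[str, float] = {}
    for group, pop_share in population_shares.items():
        # Share of all training records that carry this group's label.
        data_share = counts[group] / total if total else 0.0
        ratios[group] = data_share / pop_share
    return ratios


# Hypothetical example: group X is 30% of residents but 60% of the records.
records = ["X"] * 6 + ["Y"] * 4
shares = {"X": 0.3, "Y": 0.7}
print({g: round(r, 2) for g, r in representation_ratios(records, shares).items()})
# {'X': 2.0, 'Y': 0.57}
```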

Audits of predictive policing algorithms involve examining the data used to train the algorithms, as well as the algorithms’ performance across different demographic groups. The goal is to identify any biases and take steps to mitigate them. This process can involve several steps:

1. Data collection: Gathering a diverse and comprehensive dataset that reflects the community being served.

2. Data preprocessing: Ensuring that the data is free from errors and inconsistencies.

3. Feature selection: Identifying the most relevant variables for predicting criminal activity and checking that none of them act as proxies for race (for example, neighborhood or ZIP code).

4. Model evaluation: Assessing the algorithm’s performance across different demographic groups, for example by comparing error rates between groups.

5. Bias mitigation: Implementing strategies to reduce the impact of any identified biases.
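The model-evaluation step can be sketched in Python as computing, for each demographic group, how often the model flags cases (the selection rate) and how often it flags cases that turned out to have no recorded offense (the false positive rate). The `Record` fields, group labels, and example data are hypothetical, and the "outcome" label is itself a recorded result that may carry the same enforcement bias the audit is looking for. The comparison uses a lowest-to-highest ratio; a common heuristic borrowed from US employment-discrimination guidance (the "four-fifths rule") treats a ratio below 0.8 as a warning sign, though that threshold is an assumption here, not a policing standard.

```python
import math
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Record:
    group: str      # hypothetical demographic group label
    flagged: bool   # whether the model flagged this case as high risk
    outcome: bool   # whether an offense was later recorded (itself a possibly biased label)


def group_metrics(records: List[Record]) -> Dict[str, Dict[str, float]]:
    """Compute selection rate and false positive rate for each group."""
    metrics: Dict[str, Dict[str, float]] = {}
    for group in sorted({r.group for r in records}):
        rows = [r for r in records if r.group == group]
        negatives = [r for r in rows if not r.outcome]
        # Selection rate: share of the group's cases the model flagged.
        selection_rate = sum(r.flagged for r in rows) / len(rows)
        # False positive rate: share of cases with no recorded offense that were flagged anyway.
        fpr = sum(r.flagged for r in negatives) / len(negatives) if negatives else math.nan
        metrics[group] = {"selection_rate": selection_rate, "false_positive_rate": fpr}
    return metrics


def disparity_ratio(metrics: Dict[str, Dict[str, float]], key: str) -> float:
    """Lowest group value divided by highest (1.0 means parity)."""
    values = [m[key] for m in metrics.values() if not math.isnan(m[key])]
    highest = max(values)
    return min(values) / highest if highest > 0 else 1.0


records = [
    Record("A", True, True), Record("A", True, False),
    Record("A", False, False), Record("A", False, False),
    Record("B", True, True), Record("B", False, False),
    Record("B", False, False), Record("B", False, False),
]
m = group_metrics(records)
print(m["A"]["selection_rate"], m["B"]["selection_rate"])  # 0.5 0.25
print(disparity_ratio(m, "selection_rate"))               # 0.5
```

In this toy data, group B is flagged at half the rate of group A, well below the 0.8 heuristic. Which metric to compare (selection rate, false positive rate, or another) is itself a policy choice that the audit should document.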

Addressing racial bias in predictive policing algorithms requires collaboration between law enforcement agencies, technology developers, and community stakeholders. Some strategies for reducing bias include:

1. Inclusive data collection: Ensuring that the dataset includes diverse demographic groups, particularly underrepresented communities.

2. Continuous monitoring: Regularly reviewing the algorithms’ performance and updating them as needed to address any emerging biases.

3. Transparency: Making the algorithms’ methodologies and audit findings available to the public to foster trust and accountability.

4. Accountability: Establishing clear guidelines and consequences for violations of ethical standards.
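Continuous monitoring can be partly automated. The Python sketch below assumes an agency already computes a per-group selection rate for each audit period; it flags any period in which the lowest group's rate falls below a chosen fraction of the highest group's rate. The period labels, group names, rates, the function name `monitor`, and the 0.8 default threshold are all hypothetical choices for illustration, not an established standard.

```python
from typing import Dict, List, Tuple


def monitor(period_rates: Dict[str, Dict[str, float]],
            threshold: float = 0.8) -> List[Tuple[str, float]]:
    """Return (period, ratio) for every period whose lowest-to-highest
    group selection-rate ratio falls below the threshold."""
    alerts: List[Tuple[str, float]] = []
    for period in sorted(period_rates):
        rates = period_rates[period]
        highest = max(rates.values())
        # A period where no group was flagged at all counts as parity.
        ratio = min(rates.values()) / highest if highest > 0 else 1.0
        if ratio < threshold:
            alerts.append((period, round(ratio, 2)))
    return alerts


# Hypothetical quarterly selection rates per group.
rates_by_quarter = {
    "2024-Q1": {"A": 0.20, "B": 0.18},
    "2024-Q2": {"A": 0.25, "B": 0.15},
}
print(monitor(rates_by_quarter))  # [('2024-Q2', 0.6)]
```

An alert like this would not settle whether the algorithm is biased; it would trigger the human review and model updates that the monitoring strategy calls for.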

The adoption of autonomous technology in law enforcement is a double-edged sword. While it holds the promise of more efficient and effective police work, it also raises serious ethical concerns, particularly regarding racial bias. By conducting racial bias audits and implementing strategies to mitigate these biases, we can help keep ethical considerations at the forefront of this technological revolution.

In conclusion, as the use of predictive policing algorithms and autonomous technology continues to grow, it is crucial to prioritize ethical considerations and address the risk of racial bias. Through collaborative efforts, continuous monitoring, and transparency, we can strive to create a future where law enforcement is both effective and equitable for all members of society.