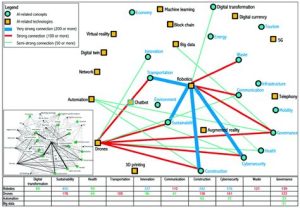

By 2028, rapid advances in artificial intelligence (AI) have given rise to a new form of warfare known as algorithmic warfare. Recognizing the significance of AI in modern military conflict, the North Atlantic Treaty Organization (NATO) has developed a comprehensive set of rules of engagement to govern the use of AI in combat. This article examines the ethical considerations behind those rules and the rules themselves.

I. Ethical Considerations

The advent of AI in warfare has raised several ethical concerns: the potential misuse of AI, the risks posed by autonomous weapons, and the moral responsibility of the military personnel who deploy them. To address these concerns, NATO has established the following ethical principles:

1. Human Oversight: AI systems must be designed and operated under human control, ensuring that decision-making authority remains with human operators.

2. Transparency: AI algorithms should be transparent enough to allow for scrutiny, accountability, and trust.

3. Avoidance of Harm: AI systems must be programmed to minimize harm to civilians and combatants alike.

4. Respect for Human Dignity: AI should not be used to dehumanize or degrade human beings.

5. Accountability: Those responsible for the design, development, and deployment of AI should be held accountable for any harm caused by its use.

II. NATO Rules of Engagement for AI Combat

To ensure that AI is used responsibly in combat scenarios, NATO has formulated a set of rules of engagement. These rules aim to protect human lives, maintain the integrity of the law of armed conflict, and promote international peace and security.

1. Human in the Loop: Every AI-assisted combat system must have a human operator who is responsible for the final decision on the use of force. This ensures that moral and legal judgment about the use of force is not delegated to a machine.

2. Pre-Deployment Assessment: Before an AI system is deployed in combat, a thorough assessment must be conducted to identify potential risks and to confirm that the system complies with the ethical principles and rules of engagement.

3. Limitation of Autonomous Weapons: Autonomous weapons systems, which can select and engage targets without human intervention, are prohibited unless NATO explicitly authorizes their use and that use complies with international law.

4. Protection of Civilians: AI systems must be programmed to minimize harm to civilians and civilian objects. This includes identifying and avoiding non-combatants and adhering to the principle of distinction between combatants and civilians.

5. Compliance with International Law: AI systems must comply with all applicable international law, including the law of armed conflict and international human rights law.

6. Data Privacy and Security: The use of AI in combat scenarios must protect the privacy and security of individuals, ensuring that personal data is not misused or compromised.

In conclusion, the 2028 NATO Rules of Engagement for AI Combat aim to strike a balance between technological advancement and ethical responsibility. Through these rules, NATO seeks to mitigate the risks of algorithmic warfare while protecting human lives and maintaining international peace and security.