The advent of lethal autonomous weapons systems (LAWS) has raised profound ethical questions. These weapons, capable of selecting and engaging targets without human intervention, have the potential to reshape warfare. The United Nations (UN) has been at the forefront of addressing these concerns, with Resolution 78.4 focusing on the ethical implications of lethal AI decision-making trees.

The resolution, adopted by the UN General Assembly, underscores the urgency of addressing the potential misuse of lethal autonomous weapons. It highlights the need for a moratorium on the development, production, and use of such systems until international regulations can be established. Its central concern is the role of decision-making trees within lethal AI systems.

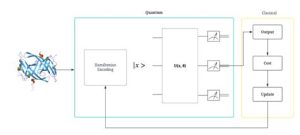

Decision-making trees, usually called decision trees in machine learning, are models that reach a decision by applying a sequence of rules or conditions, where each test branches toward a further test or a final outcome. In the context of lethal AI, these trees can be programmed to assess various factors, such as environmental conditions, enemy movements, and pre-defined rules, to determine whether to engage a target. A small tree can be read rule by rule, but as trees grow large or are combined with other models, their decision-making processes become complex and opaque, which raises significant ethical concerns.
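To make the mechanism concrete, here is a minimal sketch of a hand-written decision tree in Python. It is a deliberately neutral toy (deciding whether an outdoor event goes ahead) and is not drawn from the resolution or from any real system; the names `Node`, `Leaf`, `decide`, `wind_kmh`, and `rain_mm` are all assumptions made for illustration. It shows how a chain of conditions leads to an outcome, and how recording the path taken gives a reviewer something to audit.

```python
from dataclasses import dataclass
from typing import Callable, Union


@dataclass
class Leaf:
    """A final outcome of the tree."""
    decision: str


@dataclass
class Node:
    """One rule: a readable description, a test, and two branches."""
    description: str
    test: Callable[[dict], bool]
    if_true: Union["Node", Leaf]
    if_false: Union["Node", Leaf]


def decide(tree, observation):
    """Walk the tree and return (decision, trace of rules evaluated)."""
    trace = []
    node = tree
    while isinstance(node, Node):
        outcome = node.test(observation)
        trace.append(f"{node.description} -> {outcome}")
        node = node.if_true if outcome else node.if_false
    return node.decision, trace


# Hypothetical toy tree; thresholds are arbitrary illustration values.
TREE = Node(
    "wind_kmh > 40",
    lambda o: o["wind_kmh"] > 40,
    Leaf("cancel"),
    Node(
        "rain_mm > 5",
        lambda o: o["rain_mm"] > 5,
        Leaf("refer to human organizer"),
        Leaf("proceed"),
    ),
)

decision, trace = decide(TREE, {"wind_kmh": 12, "rain_mm": 8})
print(decision)
for step in trace:
    print(step)
```

With only two rules, the trace is easy to read in full. The same bookkeeping becomes far harder to review when a tree has thousands of branches or its inputs come from noisy sensors, which is the opacity concern discussed here.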

One of the primary concerns is the potential for unintended consequences. When a decision-making tree has many branches and depends on noisy real-world inputs, it is difficult to predict how a weapon will act in every situation. This uncertainty can lead to errors, such as attacking civilians or friendly forces, with devastating consequences. Resolution 78.4 calls for greater transparency and accountability in the development and deployment of lethal AI systems, emphasizing the need for human oversight to mitigate these risks.

Another critical issue addressed in the resolution is the potential for misuse. Lethal AI systems could be employed by rogue states or non-state actors, leading to conflicts with no clear rules of engagement. The resolution emphasizes the importance of international cooperation to prevent the proliferation of such weapons and to ensure that they are used responsibly.

Moreover, Resolution 78.4 raises questions about the very nature of warfare. As lethal AI systems become more sophisticated, they may blur the line between combatants and civilians. This raises ethical concerns about the protection of non-combatants and about just war principles such as distinction and proportionality.

To address these challenges, the resolution proposes a number of measures. First, it calls for an international dialogue on the ethical, legal, and technical aspects of lethal AI. This dialogue would involve governments, scientists, ethicists, and other stakeholders to develop a comprehensive framework for the regulation of these weapons.

Second, the resolution encourages the development of international norms and standards to guide the use of lethal AI. These norms would aim to ensure that such systems are used in a manner that is consistent with international humanitarian law and human rights.

Lastly, the resolution calls for the establishment of a monitoring mechanism to oversee the implementation of the proposed measures. This mechanism would be responsible for identifying potential violations and facilitating the resolution of disputes.

In conclusion, UN Resolution 78.4 on Lethal AI Decision-Making Trees represents a significant step forward in addressing the ethical challenges posed by autonomous war technologies. By emphasizing the need for transparency, accountability, and international cooperation, the resolution aims to ensure that lethal AI systems are developed and used responsibly. As the world continues to grapple with the implications of these technologies, it is crucial that the international community remains vigilant and proactive in shaping the future of autonomous warfare.