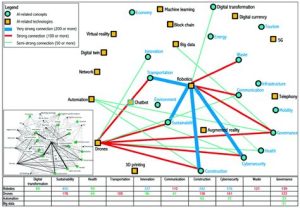

Smart cities use data and connected technologies to improve the quality of life for their residents. Alongside these benefits, however, an old form of discrimination has re-emerged in a new guise: algorithmic redlining 2.0. This article examines AI mortgage approval bias in smart cities and its implications for societal equality.

### Understanding Algorithmic Redlining

Algorithmic redlining refers to the use of algorithms that, by design or as a side effect, disadvantage certain groups of people based on factors such as race, ethnicity, or socioeconomic status. It typically arises when an algorithm is trained on biased data or relies on inputs that act as proxies for those factors, and it leads to unfair outcomes in sectors such as mortgage lending.

### The Evolution of Redlining

The original form of redlining dates back to the 1930s, when the federal Home Owners' Loan Corporation drew color-coded maps that graded neighborhoods by perceived lending risk. Neighborhoods marked in red as “hazardous” were disproportionately home to Black residents, immigrants, and other minority groups, and lenders routinely denied loans to residents of these areas because of their racial and ethnic composition.

Today, redlining has taken a different form: algorithmic redlining 2.0. With AI and machine learning, lenders can use algorithms to assess borrowers' creditworthiness at scale. These algorithms are not immune to bias, however, and they can perpetuate discrimination in mortgage lending even when race is never used as an explicit input.

### AI Mortgage Approval Bias in Smart Cities

Smart cities are characterized by their reliance on data-driven decision-making. While this can lead to numerous benefits, it also creates opportunities for algorithmic redlining 2.0. Here are some key factors contributing to AI mortgage approval bias in smart cities:

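1. **Data Bias**: The algorithms used for mortgage approval are trained on historical lending data, which may reflect past discriminatory practices. A model that learns from those decisions can reproduce them, for example by treating a ZIP code as a signal of risk, which results in biased outcomes for borrowers from marginalized communities.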

2. **Algorithmic Black Boxes**: Many AI algorithms are considered “black boxes,” meaning that their decision-making processes are not transparent. This lack of transparency makes it difficult to identify and rectify biases within the algorithms.

3. **Lack of Diverse Representation**: When AI developers and data scientists come from homogeneous backgrounds, they are more likely to overlook how a feature or design choice affects communities unlike their own, leading to biased outcomes.
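
Even when a model is a black box, its outcomes can still be measured. As a minimal sketch, assuming a hypothetical list of `(group, approved)` decisions filled with synthetic numbers, the following Python compares approval rates across groups and flags any group whose rate falls below 80% of a reference group's. The 0.8 threshold borrows the “four-fifths” rule of thumb from US employment-discrimination guidance; it is used here only as an illustrative screening heuristic, not as a legal standard for lending.

```python
from collections import defaultdict


def approval_rates(decisions):
    """Return the approval rate per group from (group, approved) pairs."""
    totals = defaultdict(int)
    approved = defaultdict(int)
    for group, ok in decisions:
        totals[group] += 1
        if ok:
            approved[group] += 1
    return {group: approved[group] / totals[group] for group in totals}


def impact_ratios(rates, reference_group):
    """Divide each group's approval rate by the reference group's rate."""
    reference_rate = rates[reference_group]
    return {group: rate / reference_rate for group, rate in rates.items()}


# Synthetic decisions (an assumption for illustration, not real lending data):
# group "A" is approved 80 times out of 100, group "B" 50 times out of 100.
decisions = (
    [("A", True)] * 80 + [("A", False)] * 20
    + [("B", True)] * 50 + [("B", False)] * 50
)

rates = approval_rates(decisions)
ratios = impact_ratios(rates, reference_group="A")

# Illustrative threshold: flag groups below 80% of the reference rate.
flagged = [group for group, ratio in ratios.items() if ratio < 0.8]
print(flagged)  # ['B']
```

A flagged group is a prompt for closer review rather than proof of discrimination, since the comparison does not account for differences in applicants' financial profiles.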

### Implications for Societal Equality

The existence of AI mortgage approval bias in smart cities has significant implications for societal equality. Here are some of the key concerns:

1. **Access to Housing**: Discriminatory mortgage lending practices can limit access to affordable housing for marginalized communities, exacerbating the housing crisis.

2. **Economic Disparities**: Because home equity is a major source of household wealth, biased mortgage approvals can widen economic disparities between groups over time.

3. **Social Inequality**: Discriminatory practices in mortgage lending can contribute to broader social inequalities, such as disparities in education, employment, and health.

### Addressing Algorithmic Redlining 2.0

To combat algorithmic redlining 2.0 in smart cities, it is essential to implement the following measures:

1. **Ethical AI Development**: Design mortgage approval algorithms to be fair and transparent, and test them for bias before and after deployment, for example by comparing approval rates across demographic groups.

2. **Diverse Representation**: Ensure that AI development teams are diverse and include individuals from marginalized communities to foster a more inclusive approach to algorithm design.

3. **Regulatory Oversight**: Implement regulations that require lenders to disclose the algorithms used for mortgage approval and ensure that they comply with ethical standards.
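
One check an auditor might run as part of such oversight is to compare approval rates for applicants with similar credit scores, so that differences in creditworthiness are at least roughly held constant. The sketch below is a simplified illustration: the field names `group`, `score`, and `approved`, the 50-point band width, and all of the data are assumptions made up for the example.

```python
from collections import defaultdict


def rates_by_band(applications, band_width=50):
    """Approval rate for each (score band, group) pair.

    Each application is a dict with hypothetical keys
    'group', 'score', and 'approved'.
    """
    counts = defaultdict(lambda: [0, 0])  # (band, group) -> [approved, total]
    for app in applications:
        band = (app["score"] // band_width) * band_width
        cell = counts[(band, app["group"])]
        cell[0] += int(app["approved"])
        cell[1] += 1
    return {key: ok / total for key, (ok, total) in counts.items()}


def band_gaps(rates, group_a, group_b):
    """Approval-rate gap (group_a minus group_b) in each band with both groups."""
    bands = sorted({band for band, _ in rates})
    return {
        band: rates[(band, group_a)] - rates[(band, group_b)]
        for band in bands
        if (band, group_a) in rates and (band, group_b) in rates
    }


def make_apps(group, score, n_approved, n_total):
    """Build synthetic applications: the first n_approved are approved."""
    return [
        {"group": group, "score": score, "approved": i < n_approved}
        for i in range(n_total)
    ]


# Synthetic data: similar scores, different outcomes in the 700-749 band.
applications = (
    make_apps("A", 720, 9, 10) + make_apps("B", 710, 6, 10)
    + make_apps("A", 680, 5, 10) + make_apps("B", 660, 5, 10)
)

gaps = band_gaps(rates_by_band(applications), "A", "B")
for band, gap in gaps.items():
    print(f"scores {band}-{band + 49}: gap of {gap:.0%}")
```

A persistent gap within a band suggests that something other than the credit score is driving decisions. A real audit would control for more factors, such as income and loan size, before drawing conclusions.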

In conclusion, algorithmic redlining 2.0 is a significant challenge facing smart cities today. By addressing the issues of AI mortgage approval bias, we can work towards a more equitable and inclusive future for all residents.