In the digital age, the online safety of children has become a paramount concern. Child Sexual Abuse Material (CSAM) is a dark reality that has prompted a global effort to safeguard young users. This article compares two approaches to combating the spread of CSAM: AI-based detection using machine learning, and human moderation.

**AI Child Protection: The Power of Machine Learning**

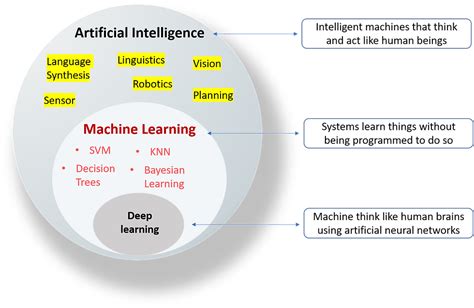

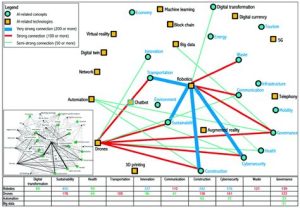

Artificial Intelligence (AI) has emerged as a powerful tool in the fight against CSAM. Machine learning algorithms in particular can analyze vast amounts of data and detect patterns that may indicate harmful content. Here are some key advantages of using AI for child protection:

1. **Speed and Efficiency**: Machine learning models can process content far faster than human reviewers, enabling swift identification and removal of CSAM.

2. **Scalability**: As the volume of online content continues to grow, AI systems can be scaled up with additional computing resources to meet the increasing demand for monitoring and filtering.

3. **Consistency**: Unlike human reviewers, AI systems do not suffer from fatigue and apply the same decision criteria to every item. They are not free of bias, however: a model can inherit biases from its training data, and its performance can degrade on content that differs from what it was trained on.

4. **Real-time Monitoring**: AI systems can screen content as it is uploaded, providing immediate alerts when potentially harmful content is detected.

**Human Moderation: The Role of Human Moderators**

Despite advances in AI technology, human moderation remains an essential component of child protection efforts. Human moderators play a crucial role in several areas:

1. **Complexity and Ambiguity**: AI systems may struggle to classify complex or ambiguous content correctly. Human moderators can provide the nuanced judgment required to distinguish innocent content from harmful content.

2. **Contextual Understanding**: Understanding the context in which content is shared is critical in determining its intent. Human moderators can interpret the nuances of human behavior and communication.

3. **Reporting and Feedback**: Human moderators' decisions can be fed back to AI systems as labeled examples, helping to improve their accuracy and performance.

4. **Human Connection**: In cases where intervention is necessary, human moderators can offer support and guidance to users, fostering a sense of accountability and responsibility.

**Combining AI and Human Moderation: A Synergistic Approach**

To effectively combat the spread of CSAM, a synergistic approach that combines the strengths of AI and human moderation is essential. Here’s how this can be achieved:

1. **Initial Screening**: Utilize AI for the initial screening of online content, focusing on identifying the most likely cases of CSAM.

2. **Human Review**: Assign human moderators to review the flagged content, confirming or overturning the AI's assessment before any action is taken.

3. **Continuous Learning**: Use the feedback from human moderators to refine AI algorithms, improving their performance over time.

4. **Education and Awareness**: Implement programs to educate both users and moderators about the dangers of CSAM, fostering a proactive approach to online safety.
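The screening, review, and continuous-learning steps can be sketched as a small triage queue. This is a minimal Python sketch under stated assumptions, not a production design: it assumes each item already carries a probability-like score from some trained classifier (not shown here), and `TriagePipeline`, `review_threshold`, and the other names are hypothetical. Items scoring at or above the threshold are queued for human review, highest score first, and each moderator decision is stored as a labeled example that could later be used to retrain or recalibrate the model.

```python
from __future__ import annotations

import heapq
from dataclasses import dataclass, field
from typing import Optional


@dataclass(order=True)
class ReviewItem:
    # heapq is a min-heap, so the negated score is stored to pop the highest score first
    sort_key: float
    item_id: str = field(compare=False)
    score: float = field(compare=False)


class TriagePipeline:
    """Hypothetical AI-first, human-second moderation triage (illustrative only)."""

    def __init__(self, review_threshold: float = 0.5) -> None:
        # Assumed cut-off on the classifier score; not a recommended value
        self.review_threshold = review_threshold
        self._queue: list[ReviewItem] = []
        # (item_id, score, human_label) tuples, usable later as retraining data
        self.feedback: list[tuple[str, float, bool]] = []

    def screen(self, item_id: str, score: float) -> bool:
        """Initial screening: queue the item for human review if its score meets the threshold."""
        if score >= self.review_threshold:
            heapq.heappush(self._queue, ReviewItem(-score, item_id, score))
            return True
        return False

    def next_for_review(self) -> Optional[ReviewItem]:
        """Human review: hand the highest-scoring pending item to a moderator."""
        return heapq.heappop(self._queue) if self._queue else None

    def record_decision(self, item: ReviewItem, is_violation: bool) -> None:
        """Continuous learning: store the moderator's verdict as a labeled example."""
        self.feedback.append((item.item_id, item.score, is_violation))

    def flag_precision(self) -> Optional[float]:
        """Share of reviewed flags that moderators confirmed (None if nothing reviewed yet)."""
        if not self.feedback:
            return None
        confirmed = sum(1 for _, _, label in self.feedback if label)
        return confirmed / len(self.feedback)


# Usage with made-up scores
pipeline = TriagePipeline(review_threshold=0.7)
for item_id, score in [("a", 0.95), ("b", 0.30), ("c", 0.80)]:
    pipeline.screen(item_id, score)

first = pipeline.next_for_review()
print(first.item_id)  # → a
pipeline.record_decision(first, is_violation=True)

second = pipeline.next_for_review()
print(second.item_id)  # → c
pipeline.record_decision(second, is_violation=False)

print(pipeline.flag_precision())  # → 0.5
print(pipeline.next_for_review())  # → None ("b" scored below the threshold)
```

Ordering the queue by score reflects the idea of focusing human attention on the most likely cases first. In practice the threshold trades reviewer workload against missed content, and the stored feedback could also inform where that threshold is set.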

In conclusion, machine learning and human moderation are both crucial in combating the spread of CSAM. By leveraging the strengths of each approach, we can create a more secure online environment for children, ensuring their safety and well-being in the digital world.